Many articles have been written about generative artificial intelligence (AI) in insurance, and this exploration of an exciting new technology is important. However, the insurance industry’s central business model (if not the entirety of the business model) comes down to a few key decision points: pricing and rating, underwriting risk decision-making, and decisions around claims payments.

I want to focus here on whether, and how, generative AI might be used in an insurer’s underwriting decision-making process. Is this technology something that will provide automation and enhancement around the periphery of an insurer’s business, or will it have a material impact on the core of the industry’s business model?

What Do We Mean by Generative AI in Insurance?

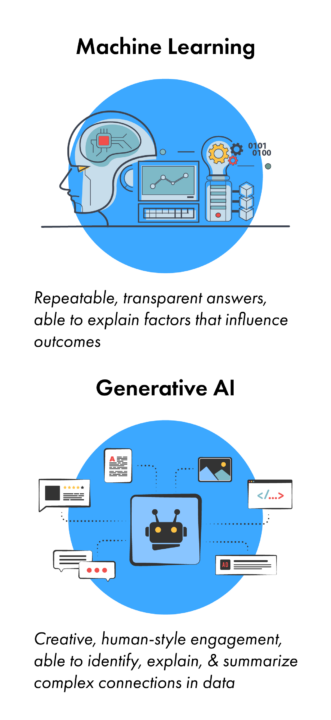

Most use of AI in the insurance industry so far has focused on machine learning for better data analytics. Insurers or vendors use machine learning and AI to analyze large sets of policy and claims data as a way of identifying the significance of key factors in actuarial or risk models, claims severity models, or fraud detection models. This is largely a “design-time” use of AI, meaning that the AI is not used as a run-time or production tool but as something on the back end to help create standard production models (e.g., an updated rate table).

Generative AI’s primary purpose, on the other hand, is to learn from large sets of varied content, including text and images, in order to generate new content. (Hence the term “generative.”) This generated content is meant to be reflective of the training data set without repeating it. Generative AI trained on a library of short stories would be able to produce a similar short story mimicking a specific style without directly copying a story word for word.

Generative AI models like ChatGPT that have been trained on a massive set of human text can then generate realistic and convincing-sounding text of their own when in “dialogue” with a human. When trained on the right content, these types of tools can produce images, code, and video in addition to text.

Which Use Case Applies?

Because of the excitement around generative AI, the topic has lately come to dominate every discussion. As a result, the lines blur, and articles about generative AI sometimes drift into use cases better suited to machine learning for analytics and predictive modeling. This is further complicated by the technology’s rapid evolution, so conversations mix “What can generative AI do for me now?” with “What might generative AI be able to do for me in the future?” The answers to those questions are not the same.

Here is a simple rubric:

- Does your use case pose a question that requires a repeatable and transparent answer with the ability to explain the factors that influenced the outcome? Does it require an answer that can be recreated in the future when run against the same data? If so, then you want machine learning for data analytics.

- Does your use case pose a question that requires creativity and human-style engagement? Does it require the ability to identify, summarize, and explain complex connections across your data? If so, then you want generative AI.
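To make the first half of the rubric concrete, here is a minimal sketch of what “repeatable and transparent” can look like: a hypothetical linear risk score that returns the same result every time it is run against the same data and breaks that result into per-factor contributions an underwriter could explain. The factor names, weights, and base score are invented for illustration and are not real rating factors or any insurer’s model.

```python
from dataclasses import dataclass

# Hypothetical factor weights, invented for illustration only (not real rating factors).
WEIGHTS = {
    "prior_claims": 0.8,
    "years_in_business": -0.1,
    "hazard_class": 0.5,
}
BASE_SCORE = 1.0  # hypothetical starting score before any factors are applied


@dataclass(frozen=True)
class ScoredSubmission:
    score: float
    contributions: dict  # factor name -> weight * factor value


def score_submission(submission: dict) -> ScoredSubmission:
    """Deterministic linear score: identical inputs always produce identical output."""
    contributions = {
        name: weight * submission[name] for name, weight in WEIGHTS.items()
    }
    return ScoredSubmission(BASE_SCORE + sum(contributions.values()), contributions)


if __name__ == "__main__":
    sub = {"prior_claims": 2, "years_in_business": 10, "hazard_class": 3}
    result = score_submission(sub)
    print(f"score: {result.score:.2f}")
    # List the factors that influenced the outcome, largest effect first.
    for name, value in sorted(result.contributions.items(), key=lambda kv: -abs(kv[1])):
        print(f"  {name}: {value:+.2f}")
```

Because the score is a fixed function of the inputs, rerunning it next year against the same submission recreates the same answer, and the contribution list documents why. A generative model offers neither guarantee by default.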

Does Generative AI Really Apply to Core Underwriting Risk Decision-Making?

Generative AI, as it stands now, attempts to create original responses that share qualities with its training data without repeating the training data directly. If you ask it the same question five times in a row, it will give different answers, possibly just alternate wording, possibly a difference in conclusion — especially if there is existing context in an ongoing conversation. If you ask the same question multiple times with a slight variation in language, generative AI might give very different answers. Subtleties that seem like simple word choices to humans may lead an algorithm down an entirely different path.
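A toy illustration of why this happens: generative models typically produce text by sampling from a probability distribution, often sharpened or flattened by a “temperature” setting. The sketch below assumes a hypothetical model that scores three whole canned answers to one underwriting prompt (real large language models sample token by token, so this is a deliberate simplification), and the scores are invented. With sampling, repeated calls can return different answers; with greedy selection, the most likely answer comes back every time.

```python
import math
import random

# Hypothetical model scores (logits) for three candidate answers to a single prompt.
# Invented for illustration; a real LLM samples token by token, not whole answers.
CANDIDATES = {
    "Accept the submission.": 2.0,
    "Accept with a higher deductible.": 1.5,
    "Refer to a senior underwriter.": 1.0,
}


def softmax(logits, temperature):
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def answer(rng, temperature=1.0):
    """Return one answer: sampled if temperature > 0, greedy (most likely) otherwise."""
    answers = list(CANDIDATES)
    logits = list(CANDIDATES.values())
    if temperature <= 0:
        return answers[logits.index(max(logits))]
    probs = softmax(logits, temperature)
    return rng.choices(answers, weights=probs, k=1)[0]


if __name__ == "__main__":
    rng = random.Random()  # unseeded, so sampled results differ from run to run
    print("Sampled:", [answer(rng) for _ in range(5)])
    print("Greedy: ", [answer(rng, temperature=0) for _ in range(5)])
```

Fixing the random seed or using greedy selection makes this toy repeatable; whether a given hosted model exposes equivalent controls, and how reliably they work, depends on the provider.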

In some ways, this sounds familiar. Many insurers have failed to codify their underwriting decisions because of the perceived complexity. This means different human underwriters might come to different decisions about the same submission. Even a single underwriter might decide the same submission differently on a different day. To be clear, this is not necessarily a bad thing. Context outside of a single submission does matter. Perhaps an underwriter has just approved several policies in a row with a similar specialty risk profile and wants to avoid oversaturating that specific risk category.

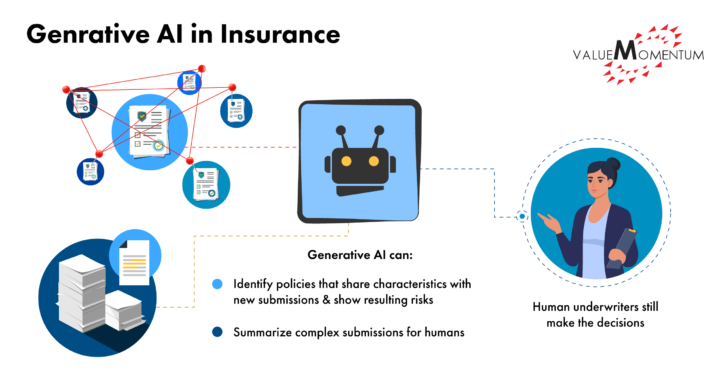

However, even if generative AI could outperform underwriters in decision-making in that fashion, insurers need to move away from unpredictable risk decision-making that can’t be justified in retrospect based on clear metrics and data factors. Based on the above rubric, underwriting risk decisions clearly fall into the “machine learning for data analytics” classification rather than generative AI.

But this isn’t to say that generative AI can’t aid in the process. It can draw powerful connections across its training data, recognizing similarities that humans likely would not. It can identify policies that share characteristics with a new submission and show how those turned out from a risk perspective. For complex submissions with many pages of information, generative AI can summarize the key points in a way that humans can understand. All of this can help a human underwriter come to a decision, much the same way that a predictive score generated from a standardized model can help.

In some ways, predictive models created from machine learning represent a data-based enhancement to underwriters, while generative AI might represent an intuitive-style enhancement. Insurers might be comfortable handing the entire risk decision to a repeatable and transparent predictive model, especially for simpler risks. Given its creativity and unrepeatability, however, generative AI should not drive underwriting decisions without a human counterpart.

What Does the Future Hold?

As generative AI improves and is trained on ever more data, this picture might change. The reality is that a technology that can simulate human language and decision-making has emergent properties we don’t yet fully understand, and those properties will only grow more capable as the technology improves and its training data grows more massive.

Which algorithm can make a better underwriting risk prediction? Is it the model built using specific machine learning formulas run against one insurer’s policy and claims data? Or is it the model trained on one insurer’s policy and claims data plus a massive set of additional information mostly unrelated to insurance but resulting in something that can simulate creative human language and decision-making? Perhaps in the future we will leverage some combination of the two.

To discuss how generative AI is impacting the insurance industry, you can connect with Jeff here on LinkedIn.

To learn more about how to make the most out of your data platforms, check out ValueMomentum’s DataLeverage services.

About the Author:

Jeff Goldberg is an accomplished entrepreneur and tech strategist with a strong focus on driving innovation and growth across industries. With expertise in advising CIOs and tech firms on honing their technology strategy to meet business needs, Jeff excels at bridging the gap between cutting-edge technology and industry requirements. He has extensive insurance industry experience, along with a deep understanding of data analytics, digital strategy, cloud enablement, and innovation. As part of his consulting work, he serves as a fractional/interim CIO for insurance carriers, most recently at NIP Group.